Originally published on 2025AD - The Platform for Automated Driving

The challenge is clear: in an automated vehicle, human and machine must form a relationship. But just how? 2025AD attended the expert conference Car HMI USA 2017 in Detroit – and found surprising and unconventional answers.

In a way, Detroit is a very symbolic place to host a conference on the future of driving. In the heyday of the American car industry in the mid-20th century, the city was prospering. Ever since then, a long and slow decline of the industry accompanied a long and slow decay of Motor City. But since the end of the financial crisis, the U.S. “Big Three” – Ford, GM and Chrysler – have bounced back. And so has Detroit. Walking through the city these days, you can sense an optimistic mood. Public parks are being refurbished, urban areas are getting a long-needed renovation. And while the city still lacks a functional public transportation system, people are happily hailing their Uber cab to get from one bar to the next. Or to Dearborn, a Detroit suburb.

Not only is Dearborn home to Ford’s worldwide headquarters. Last week, it was also home to Car HMI USA 2017 – an expert conference on user experience in the vehicles of the future. With cars becoming increasingly connected and automated, how will humans and machines interact? And how can a safe and comfortable driving experience be achieved? Those were the central questions that high-ranking experts from OEMs, suppliers and academia discussed.

Creating a Human Robot Relationship

One common theme dominated the agenda as well as the discussions during coffee breaks: trust comes first. Only if that is given will users feel comfortable in an automated vehicle. Or as Cyriel Diels, a human factors researcher at Coventry University, put it: “Car and user must form a relationship - we need to evolve from Human Machine Interaction (HMI) to Human Robot Relationship (HRR).”

That is especially true since car drivers today obviously feel overwhelmed by the complexity of current HMI technology. To drive home his message, Ford manager James Forbes presented the evolution of the Ford Explorer between 1998 and 2017: the number of steering wheel buttons more than quadrupled to 22.

Do customers really understand them? The answer is probably no. “98% of drivers don’t understand all dashboard lamps,” said Ketan Dande, Senior Diagnostic Software Engineer at Faraday Future. No wonder: U.S. car buyers on average spend only a couple of minutes talking to the vehicle dealer before the purchase – certainly not enough time to explain all HMI features. And since it is not likely drivers will thoroughly study the manual before using the car, one conclusion must be: the HMI has to be intuitive and easy to use – especially in critical situations.

Level 3 Automation: The Nightmare of all Carmakers

One critical use case heavily discussed at the event: the transition process between manual and machine driving in semi-automated cars. Level 2 automation can already be found on our roads today, for instance in Tesla’s Model S. It has already proven itself to be very tricky. While the car is able to take over all driving tasks for defined use cases, the driver must constantly monitor the road – which many users do not seem to take seriously. Cars with level 3 automation are also able to perform all driving tasks in certain situations, for example in smooth traffic on the highway. However, the driver no longer needs to monitor the road at all times; he just needs to be ready to resume control. If the car gets into a situation beyond its capabilities, it must notify the driver so he can take over.

How can you keep the driver in the loop? You could feel an almost tangible aura of uncertainty at the conference on how to solve this task. What makes it so challenging? The looming danger is mode confusion – a problem known from aviation. The driver must receive clear and comprehensible information about who is in charge of driving at all times. A recent study showed that it took drivers in transfer conditions four to six, sometimes up to 16 seconds to anticipate a latent hazard.

Joe Klesing, Executive Director Autonomous Steering & Comfort at supplier Nexteer, suggests a threefold approach. First, using in-vehicle cameras for gaze and head tracking to determine if the driver is ready to take over. If he has fallen asleep or has turned around to feed the kids, he clearly needs an urgent auditory appeal. If his eyes are focused on the road anyway, a less pressing sound could be sufficient.

Second, a head-up display directing attention towards the potential hazard. This makes it transparent for the driver why he needs to take over. And third, a steering wheel that retracts while in autonomous mode. If the driver wants to resume control, he must actively pull the steering wheel so it moves back towards him. With this haptic cue, mode confusion can be avoided.

Distracted Driving - a modern Curse

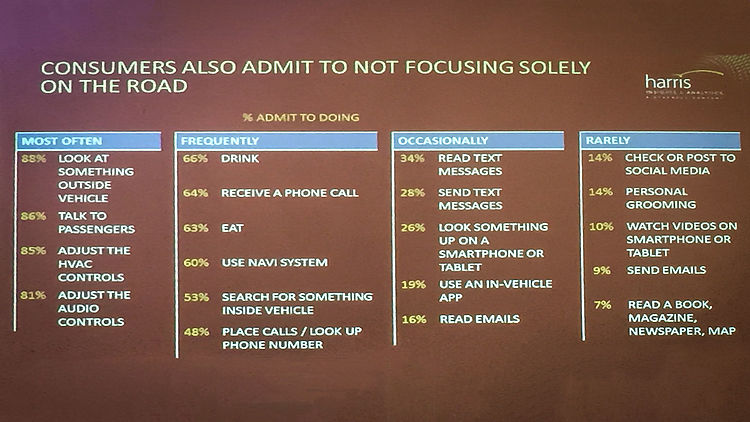

In that context, another problem that needs to be tackled is distracted driving. According to NHTSA data, almost 3,500 Americans were killed in 2015 by distracted driving – and the number rose further in 2016. Distractor number one: the smartphone. Risk group number one: teenagers and young adults. That is why all OEMs and suppliers are looking for ways to reduce that hazard source.

Interestingly, it seemed a foregone conclusion among conference speakers that teenagers will not be willing to give up their phone while driving. According to Carl D. Marci, Chief Neuroscientist at Nielsen, digital natives on average switch devices 27 times in one hour, for instance between the television and their smartphone. “People have developed new habits in the living room that they won’t drop in the car,” Marci stated. The consequence: tests have shown that in surprising takeover situations, it takes people longer to react if they were using their smartphone at that moment. A solution supplier Valeo suggests: smartphone screen mirroring integrated into the instrument cluster and operable through the steering wheel. With the screen in a higher viewing field and the hands free, this accelerates the driver’s reaction.

Will we skip Level 3 entirely?

While all these suggestions might facilitate the handover process, level 3 automation is still considered a tough nut to crack. Too tough? Most industry insiders at Car HMI USA took on a rather stern look when asked about this issue. Who will be liable if a level 3 vehicle causes an accident? Driver or OEM? “The first major accident in the U.S. is going to be a big game changer,” said a senior engineer at a large OEM during a workshop, indicating that courts might have to find an answer to that question. To make matters even more difficult, international traffic authorities are expected to push for common standards for level 3 takeover processes. A consumer from Europe should intuitively be able to use a level 3 car in Asia or America and vice versa. A solution that is being seriously discussed: skipping level 3 entirely - a step that Google . “Until fully autonomous cars are deployed, we might have level 2 in urban areas and level 4 on highways,” said Oliver Rumpf-Steppat, Head of Product Requirements, Development & Connected Drive at BMW North America. Volvo and Ford have already announced they will skip level 3, with other OEMs expected to follow suit.

Once we reach level 5, new challenges of designing a car will arise. Warren Schramm, technical director at design consultancy Teague, questioned a 120-year-old basic assumption of the car industry: that cars are built for the driver. “We will have to ask ourselves: what do we build for, if not driving?” Once the steering wheel, the separation of seats and the center console become obsolete and electric drivetrains are standard, much more space will be available - as recently demonstrated by Volkswagen’s Sedric concept vehicle.

Bold Business Ideas for Driverless Car Services

“The cabin needs to be completely reconfigurable. Flexibility is paramount,” said Schramm. He predicted that virtually any mobility service will be possible with purpose-built vehicles. “Go to sleep, wake up in Vegas,” he called his idea for a rolling hotel room. “Or what if you could hail your shopping experience – a driving boutique.” Hairdresser or dentist appointments, teleconferences – there are countless possibilities to make use of the time gained during the ride. Schramm presented his most unconventional idea with a mischievous smile: “What if TSA picked you up?” The idea: Why not use the ride to the airport to get the security check done in the car? This self-driving shuttle would be equipped with security staff, passport and body scanners. This may sound a little outlandish at first. However, knowing how time-consuming these security checks are, it is not hard to imagine finding customers for this business idea.

For that to happen, one very human problem will need to be overcome in an autonomous car: motion sickness. If people don’t steer themselves anymore, it becomes harder for them to anticipate where they are actually going. Adding to that are tasks like reading on a smartphone and alternative seating arrangements in the car. Schramm suggested using frosted glass: “If people are less aware of the motion, sickness can be greatly reduced.”

For the moment, this admittedly seems like a distant problem. After two lively days, the conference ended with a spirit of optimism. “The key user experiences are the moments you fall in love with your car,” Ford Manager James Forbes had said. The challenges may not be small, but the knowledge is there to create HMIs that people will embrace. Good prospects for the automotive industry. And therefore, good prospects for Detroit – the Motor City.
