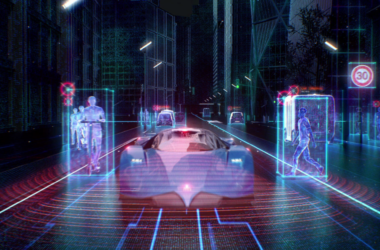

Real-world data is often thought of as the pinnacle of information – the primary details autonomous vehicles need to advance toward deployment. It may not provide a complete picture, however, leaving massive gaps in the features and functionalities that can be tested accurately.

Anyverse, a provider of data solutions for perception-based robotics, is tackling this problem head-on. The firm is working to overcome the hurdles of autonomous vehicle development by generating physics-accurate datasets with challenging weather and lighting conditions, just like those found in real life.

Victor Gonzalez, founder and CEO of Anyverse (and its parent company, Next Limit Technologies), said that yellow lights – a rather simple traffic signal for humans – have proven to be a surprising challenge for self-driving cars. He explained that the usual solution has relied on images and data from actual traffic lights, but that is not nearly enough for AVs to learn from. Yellow lights occur much less frequently (and are displayed for shorter intervals) than red and green lights, so real-world footage contains far fewer examples of them.

“The problem with automated vehicles is they won’t be able to recognize yellow lights,” said Gonzalez. “It seems like a tiny problem, but it’s not, so we can simulate traffic lights with all those conditions – yellow, green, red – without issue. We are looking at those situations where it’s not very clear which traffic light is the one you should choose. Sometimes you have those blinking problems, those combinations of lights, and you’re not sure about it. If humans have problems, imagine an automated system that is not well trained.”

Thru signs, green arrows and road construction present a whole other set of issues. What happens if traffic shifts sides when a road is being resurfaced? How will an AV respond when it approaches a roundabout in an area that used to contain a four-way stop?

“There are so many situations,” Gonzalez warned. “Today we have the Teslas and other cars that can [perform well] as long as nothing strange is there. Construction zones, anything that is not standard, could make them fail – and that is very problematic. For humans it’s easy, but for machines we need to tell it what to do. We cannot just make a classic drawing of all that stuff and tell them, ‘If this happens, you can do this.’ We have to use machine learning to train with vast amounts of data.”

Autonomous vehicles will also be at the mercy of human driving behaviors unless they learn to adapt while maintaining a greater degree of safety.

“For humans, there’s some level of aggressiveness,” Gonzalez explained, adding that human drivers will simply (if not dangerously) force their way into a lane. “That kind of behavior is very difficult to tell a machine because the machine is supposed to be very conservative. But if we just take the road as we are today, the machine will be so conservative it will be useless.”

Gonzalez further detailed a situation in which multiple drivers approach the same area at the same time. “Those kinds of situations are [annoying for humans], but in the end we just push a little to let the other person know you want to pass,” he said. “There’s a lot of communication going on that humans understand. We need to put that kind of information into the machine. Otherwise it will just play the conservative approach and do nothing, and that’s useless for what [the auto industry is] trying to achieve.”

About the author:

Louis Bedigian is an experienced journalist and contributor to various automotive trade publications. He is a dynamic writer, editor and communications specialist with expertise in the areas of journalism, promotional copy, PR, research and social networking.